Seven Questions for the Age of Robots

Jordan B. Pollack

Yale Bioethics Seminar, Jan 2004

Abstract

Humanity's hopes and fears about robots have been exaggerated by fantasy movies. Humanoid robots pander to our deep desire for (ethical) slavery. My own lab's work on automatically designed and manufactured robots has raised fears about "out of control" self-replication. Having worked on AI for 30 years, I can say with certainty that Moore's law doesn't apply to software design, and so I reject both the optimistic take-over-the-world view that AI is headed for a super-human singularity, as well as the pessimistic take-over-the-world view of a self-replicating electromechanical system.

Robots are just real-world machines controlled by algorithmic processes. The only machines which "reproduce" are the ones which earn a return on the investment in their design and production. Robots are not sentient and cannot obey Asimov's Laws. Today's most common robots are ATMs and inkjet printers, which cannot know that you stuck your finger in the wrong place! Yet these robots have collapsed the job markets for bank tellers and typists, respectively.

As robotic automation approaches biomimetic complexity, as the technology of bio-interaction improves, as broadband allows global telecommuting, and as the production of goods evolves away from centralized factories towards home replicators like the CD-Recorder, ethical issues DO arise. Even though Asimov's and Moore's Laws are irrelevant, should there be Laws for Robots? I will discuss 7 (±2) ethical questions, touching upon cost externalization, human identity, intellectual property, employment law, and global wage equalization.

Introduction

Is this Baby Robot a Boy or a Girl?

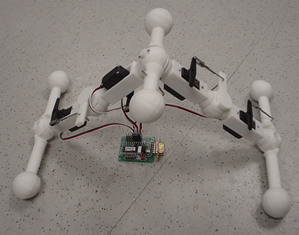

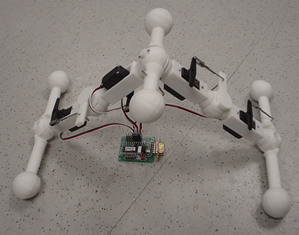

A young visitor recently came into my lab to see some of our automatically designed and manufactured robots and asked, "Is that robot a boy or a girl?"

It is the triumph of literary fantasy over scientific reality that ordinary people would even stop to consider the sex of a robot! Terminator 3 recently starred a fembot more seriously equipped than the militarized mammary shtick in the Austin Powers movie.

But it is truly a silly question! We might as well ask what is the gender of your computer printer? What is the gender of your electric drill, or your toaster? We can put pink or green clothes on an 11-inch plastic shape and call it Barbie or GI Joe, but the sex of dolls and robots is skin deep, reflecting only the fantasy of the observer.

Because the origin of robots is in fantasy literature, from the Golem myths, Frankenstein and RUR through the Asimov novels, to Star Wars, Star Trek, etc., humanity's expectations about robots are seriously flawed. In one false hope, robots will be like the personal computers of 1980 and obey Moore's law, getting twice as cheap and twice as functional every year, leading to our Jetsonian future. In Wired, Hans Moravec recently heralded the Roomba and other cleaningbots:

"After decades of false starts, the industry is finally taking off. I see all the signs of a vigorous, competitive industry. I really feel this time for sure we'll have an exponentially growing robot industry."

From the press release for the launch of a new VC-funded robotics company in 2002: "Robots are absolutely going to have a big impact on our lives over the next 20 years," predicts Bill Gross, the founder of prominent technology incubator IdeaLabs. "It's like the PC industry in 1981."

It uses exactly the same PR as the hobby robotics company launched by Nolan Bushnell, the founder of Atari, in 1985. Just as Nobel laureates think they can solve the problem of consciousness, successful entrepreneurs think they can take on AI.

As anyone playing with Lego robots will attest, each tiny hardware design change can trigger a giant software re-engineering effort, 24 hours of programming for each hour of playing with blocks. Why do investors fall for claims that Moore's law is surely going to work this time, when there is no evidence that Moore's law has ever helped with a software engineering problem?

While we have not seen any robots-which-look-like-literary-robots hit the big time, we have seen exponential growth in sales of robotic devices when they offer an ROI, either in entertainment value or labor-saving value. But this exponential growth is far different from the Biblical imperative to be fruitful and multiply. The very idea of robots fruitfully multiplying raises the false fear of the singularity, that magical moment when machines may suddenly become smart enough and shrewd enough to disobey Asimov's Laws and rise up to wipe out humanity.

Isaac Asimov's Hippocratic-oath-inspired "3 laws of robotics," refreshed in our minds by Will Smith's I, Robot movie, do not apply until machines really are sentient. "Do not hurt humans" is meaningless to today's robot, the ATM or the inkjet printer. My definition of a robot is a physical machine which interacts with the real world, is controlled by an algorithmic process (i.e. a program or circuit), and can operate 24x7 and earn its own return on investment (ROI), often by putting humans out of work. While they don't know it is your finger caught in the gears, robots do hurt: the M&A frenzy of banks, and the laying off of tellers, is directly due to the prevalence of ATMs. The personal printer has killed the market for typists.

Robots are just tools, not people. They are already here, and they will make progress as long as they provide a positive ROI. A machine is sold whenever a customer judges its value to be greater than its cost. Automation already exists in every manufacturing industry where the value of a $100,000 machine or a $3B factory can be proven, such as chip production, pharmaceutical testing, and disk copying/software packaging. But general purpose automation is still in its infancy.

Because expectations are so high, human fears about robots are equally flawed. Bill Joy's WIRED article on "out of control" replication sounded a false alarm, making reproducing robots seem as frightening as genetically modified foods interacting with the biosphere, or germ warfare viruses taking out whole swaths of life. I am very sympathetic to concerns about the impact of successful human inventions on Gaia, especially when profits arise through the externalization of hidden costs. But my lab is not making robots which make baby robots which make more baby robots; we are designing industrial technology which can design and make baby toasters and baby electric drills. The drill cannot screw the toaster, then eat your old fax machine to get the chips to make more baby drills and toasters!

Even though there are related labs, such as Murata's and Chirikjian's, focusing on the theoretical and practical aspects of self-replication, an out-of-control electromechanical reaction is impossible, because the means of production for the parts (chips, sensors, motors, etc.) are "high" technology rather than freely available natural resources. A "Terminator" process would require the combined industrial facilities of Exxon for energy, GM for mechanics, Sony for electronics, and Microsoft for software (and luckily they are not all located in California, where Arnold "The Governator" Schwarzenegger rules).

There are different conceptions of the Terminator scenario. One is the singularity, an idea first ascribed to SF writer Vernor Vinge, in which machines pass an inflection point, becoming so much more intelligent than people that they reduce us to second-class citizens. Ray Kurzweil has written positively about the impact of the singularity. A particularly wide-ranging and thoughtful treatise on the potential extreme impact of AI on the future is an e-book by lay scholar Alan "HAL" Keele, called "Heaven or Hell; It's your choice".

However, the "means of production" concept has the power to help separate our fictional fears from reality. A runaway industrial process, like automobiles, or Walkmen, or the latest craze, iPods, is really a cultural loop of consumption, profit, marketing and innovation controlled by humans. Ownership of the means of production in the Middle Ages was granted by the king, but now production is organized under corporate law. As automation proceeds, the means of production can evolve away from centralized factories, to local factories, and finally into the small business or home shop. The original printing presses were capital equipment and required many typesetting laborers. Now we have word processors which set virtual type, driving $100 printers in every room of the home. The studio for musicians was a major affair: soundproof rooms, the generation of wax masters, and the factories stamping vinyl and printing cardboard boxes. Now a $400 PC with a CD burner does the trick. And the Hollywood studios of yore, which invented the "work for hire" doctrine, stripping creative people of their fractional equity because they claimed accountants couldn't divide up the loot, are competing against home movies shot on a digicam, edited and distributed by anyone with a DVD burner.

But Nature owns its own vast means of production, called ecosystems, and unlike high-tech manufacturing, in which flaws cause product returns, the disruption of the chemical factories of Nature can have a larger impact on the food chain, of which we humans are part.

So, while I think there are more immediate ethical issues for genetic engineering than genetic programming, there are still important questions to ask about robotics research in particular and "intelligent" technology in general, and I will describe 7 issues which humans should be discussing based on lessons from our past, and extrapolations to our future.

From the past, I note humanity's inability to account for the externalized costs of our technology. "Machines which have byproducts" need to be examined before we make a new doomsday machine like the internal combustion engine, whose immense popularity creates a social entitlement to spew carbon monoxide and drill through mountains to lay asphalt.

From the future I note that as automation invades more and more industries, not only will less-skilled labor be impacted, but the traditional division between capital and labor, between owners and workers, between fixed and variable costs, and across geographical regions will collapse. In the future of automation, the questions of "ownership" of ideas and products, and the interactions of local labor customs, take on new significance not considered since Karl Marx was erased from western history books.

Seven Questions

I. Should robots be humanoid?

Marge Piercy explored a "taboo" on putting AI into human form in her book "He, She and It". The fundamental law of this retelling of the Golem legend is that it's OK to have sentient software and android pets, but there must be a legal restriction on pouring AI into humanoid form. In the book, all hell breaks loose over civil rights and sexual mores when a technokibbutz violates the law.

My take is that we are so very far from sentience that it is not yet a problem. Humanoid robots capture the imagination but never produce any ROI. They exist only for PR's sake now, displaying technological prowess so consumers will believe that Honda's and Sony's other products are superior. So - and this may be the brilliance of MIT's Media Lab - research dollars for humanoids should always be sought not from thin engineering budgets but from fat marketing budgets.

We could set up a national "moon shot" program to build a humanoid robot before Japan does. However, there are serious concerns about the utility of a humanoid robot, even disregarding the fact that the brain for these robots is nowhere on the research horizon. For every task one can conceive of a humanoid robot performing, such as pushing a vacuum, driving a car, dialing a telephone, or fighting a war, there is simpler automation, such as random walks, cruise control, touchtone, and smart bombs, in which the physical accoutrements created for human control do not need to be maintained to accomplish the job.

I think that there will be humanoid robots some day, but they won't be sentient; they will be expertly trained sales puppets. I think that 5-10 years after webbots earn an ROI, there will be physical instantiations of these webbots. To explain, a webbot is a sort of animated graphic face which engages website visitors in a script. The first and second generations of these talking-head companies have already gone bankrupt, except for Ananova, which is subsidized to deliver news. But imagine visiting a website in 2010, where an animated face using subtle expressions, emotional text-to-speech scripting and speech recognition is wildly successful at selling you life insurance, or investments, or books.

Now, when the simulation of a salesperson is so good that humanity overcomes its revulsion and pushes the BUY button, then it will be WORTH porting that software onto mechanical robot bodies which hang around in automobile and furniture showrooms, answer all your questions patiently, find common interests, go and talk to the manager about a better discount on your behalf, and subtly bully you with tightly coiled physical strength, just enough to get the sale closed. Good salespeople are so hard to find, we will have to make them. So, the biggest risk of humanoid robots is that they become door-to-door walking spambots, selling snake oil and worthless investments.

II. Should we become robots?

We are nearing an age where humans and computers may be intimately connected, where instead of using touch and vision, we have direct neural interfaces, in two directions.

In the output direction, we may be able to quickly project a sequence of mental states which a set of sensors and trained, personalized software could reliably classify into a sequence of symbols.

This is not "mind reading" but accurate classification of projected brain states. After reading about work in which biofeedback from a video "cursor" enabled human subjects to control a computer cursor, I designed an experiment in 1992 with Michael Torrello at the Ohio State University, where we tried to use biofeedback and neural networks to let a human train to produce a set of 32 different mental states which could be reliably read and classified by a computer. The 32 thoughts were 16 opposite pairs which might activate specific cortical areas and be picked up by surface EEG electrodes, like "hot/cold", "black/white", "happy/sad", "smooth/rough", and "perfume/manure". If we could achieve 5 bits (one of 32 states), 5 times a second, it would be a 'mental typewriter' competitive with the manual keyboard, but much quieter.
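The 5-bit figure follows from the 32 states: choosing one of 32 equally likely states carries log2(32) = 5 bits. The short Python sketch below works through that arithmetic. It is illustrative only and rests on assumptions that go beyond the experiment as described: every state is classified correctly, one state stands for one typed character, and a "word" is 5 characters (the usual typing-speed convention). The function names are hypothetical.

```python
import math

# Illustrative arithmetic for the "mental typewriter" (not the 1992 code).
# Assumptions: states are equally likely and always classified correctly,
# one state = one typed character, one "word" = 5 characters.


def bits_per_state(num_states: int) -> float:
    """Information carried by one choice among equally likely states."""
    return math.log2(num_states)


def bit_rate(num_states: int, states_per_second: float) -> float:
    """Bits per second when every state is read correctly."""
    return bits_per_state(num_states) * states_per_second


def words_per_minute(states_per_second: float, chars_per_word: int = 5) -> float:
    """Typing-speed equivalent, assuming one state per character."""
    return states_per_second * 60 / chars_per_word


if __name__ == "__main__":
    print(bits_per_state(32))    # 5.0
    print(bit_rate(32, 5))       # 25.0
    print(words_per_minute(5))   # 60.0
```

Under these assumptions, 5 states per second is 25 bits per second, or roughly 60 words per minute; any misclassification would lower the effective rate.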

In the input direction, sensory or analytic data from the world may be spatially or temporally formatted in such a way that a population of neurons could learn to "see" the data as an image inside our head, a technological version of the Cartesian Theatre.

With any technology there are good and bad uses. A good use of output technology is to provide paraplegics with the ability to control their wheelchairs or their environment. There are very profitable and world-changing possibilities, because a stream of symbols from the brain could be used to represent phonemes instead of letters, and could be hooked up to a personalized text-to-speech generator tuned to sound just like ME! Hooked up to a cellular phone which uses a bone-conductance speaker, it would create the technological equivalent of "telepathy" (silent shared conversation at a distance). I open my mental phone, dial you, and hold a conversation silently. It is not so far off.

A good use of input technology is to cure blindness or deafness, or to enable people to access encyclopedic information available on the net faster than visually.

But a bad use of brain input technology is to control rather than inform an animal brain. This dark side of cyborgization was foreseen by early pioneers like Norbert Wiener and Joseph Weizenbaum. The robotics problem was so hard that early proponents seriously suggested "scooping" out the brains of cats and controlling the cheaply reproduced bodies using cybernetic circuitry. A few years ago, a beetle was steered using an external mechanism stimulating its antennae. More recently, a "cute" demo placed electrodes in the brain of a rat, and then conditioned the rat with pleasure when it responded to control signals by turning left and right correctly. After training, the ratbot can be "steered" by radio remote control like an RC car. Normally, we could expect the Catholic Church to have protested this as a crime against life, but it was somewhat busy at the time.

Does bio control have positive applications in stopping brain malfunctions such as mania or epilepsy? Perhaps, but there are negative outcomes to bio control which we can easily predict. While these are rough experiments which replace the sounds or lights of classical conditioning with implanted electrodes and external circuit boards, continuing down this path will lead to integrated circuits implanted earlier and earlier in the developmental cycle of an animal, requiring less and less training as the circuit gets closer to the controls over instinct. And if we can scoop out animal brains, we will be able to scoop out human brains to get robots with opposable thumbs. Finally, the military advantage of controlled animals in a wartime situation is very temporary; the simple countermeasure is species-cide, where military doctrine calls for killing all animals out of fear that one kind may be spying.

III. Should Robots Excrete By-products?

One of the things to watch out for in any new industry is when profits are made through a sustained negative contribution to the environment. When cars were invented, no one imagined that tens of millions of them would go around spewing carbon monoxide and carbon dioxide into the atmosphere. A different engine design is one of the highest priorities to reduce global warming. Today's robots, ATMs and inkjet printers, generate only paper and empty cartridges.

If a popular robot were made with an internal combustion engine, running on gasoline like a lawnmower, then when it achieved success in the marketplace, we could expect another 10M devices spewing filth. While the Segway doesn't yet have the price/performance of a bicycle, at least it is clean! Note to Mr. Kamen: we are still waiting for the hydrogen-powered Stirling engine it was supposed to be!

IV. Should Robots Eat?

Friends of mine who were at the University of the West of England proposed building robots for agricultural use which would get their power by combusting insects. Not only would it help weed and harvest, but it would take care of your grub problem too, working for months without refueling. The University of South Florida is doing it as well.

The robot power problem is significant, and using insects for food is certainly a novel suggestion. Most laboratory robots run on rechargeable batteries with worse life than a laptop. In our lab, we built a special power floor with metal tape and pickups on the bottom of the robots to be able to run for days, so robots could learn in their actual environment.

But the long-term impact of a successful robotic industry powered by biological food is somewhat negative because, if such robots multiply exponentially, we begin to compete with our own technological progeny for subsistence. The answer must be solar, hydrogen, or wind, not artificial bio-digestion.

V. Should robots carry weapons?

Every year, in order to justify some of its R&D programs into robotics, the Defense Department creates scenarios where intelligent robots would aid or replace human soldiers on the battlefield of the future.

We need to make a clear distinction between armed telerobots where the fire button is pressed by a human within a chain of command, and an autonomous weapon which chooses when to fire itself. Landmines are the prototype of autonomous robot weaponry, and are already a blight on the world.

Given that the psychological age of an autonomous robot is under 1 year old, it seems like quite a bad idea to equip them with guns. First, giving automatic weapons to babies is not something a civilized country would do. In the long term, by removing the cost of war in "our boys," we raise the specter of "adventure for adventure's sake," which would be a net downgrade in human civilization back to the days of barbarians.

Let me be explicit about my interests here: DON'T CUT MILITARY FUNDING FOR ROBOTS!!! There are many useful roles for walking, running, flying, and swimming robots in the military, including mapping, de-mining, cleanup, surveillance, and schlepping. Schlepping is a Yiddish word for hauling; here it means delivering supplies. Robots can help avoid making heroes out of people ambushed while schlepping. But to avoid embarrassing episodes of electronic friendly fire, perhaps there should be a 10-year moratorium on autonomous army-bots carrying weapons. This moratorium could renew every decade until either some new ideological enemy does it first, or a staff psychologist certifies the robots to have the psychological age of, say, 13, the age at which boys are drafted in third world civil wars.

VI. Should Robots import Human Brains?

Here I am not referring to the idea of achieving immortality by "pouring" one's brain into a robotic body (rather than cloning yourself and transplanting your brain). I am talking about something remotely possible.

A telerobot is a machine controlled by a human, like an R/C (remote control) car or model airplane. Unlike an R/C toy, however, a telerobot is not operated within the human's visual range. To be "tele," the robot should be distant, and the human, using joysticks, or wearing body sensors and gloves, controls the robot by wire, e.g. across the Ethernet. Video cameras on the telerobot transmit visual information back to the human. The human controller can move the telerobot, see its environment, and even send voice across the wire.

Telerobotics is really a form of sophisticated puppetry. In fact, such machines shouldn't be called robots at all, but electronic puppets. Nevertheless, they are quite useful. Surgeons in big cities can teleoperate in rural areas, lowering costs and reducing the duplication of healthcare infrastructure. The military can use teleoperated flying drones for surveillance of hostile areas. Telerobots can be used in industrial and nuclear spill cleanup. NASA has invested in a very sophisticated humanoid puppet called the Robonaut for spacewalks.

The good side of telerobotics is clear. But there is a dark side. There is much talk about the outsourcing of high-tech jobs to places like India and Russia, where skilled white-collar workers are available for low wages. America has lost a lot of jobs, and even as the stock market climbs, the job market remains soft, because the new jobs are being created more cheaply overseas.

However, this exportation of jobs is nothing compared to the impact of the importation of brains, which becomes possible when Telerobotics and Broadband meet.

I was reading the other day about how the Republican Party was using call centers in India for fundraising. The overseas call center staff speaks very good English, yet each worker only makes $5 a day. Now consider a humanoid telerobot using Wi-Fi instead of an Ethernet cable. For, say, $10,000 for the robot body and $10/day for the overseas operator, we can bring our factories back from China, but keep using Chinese workers! We can have programmers from India virtually present in cubicles in Boston!

Our new factory workers (and eventually our domestic servants, farmworkers, and schoolteachers) will be telerobots inhabited by people happy to make $5/day. So for $10,000 and $10/day, a robot in your house, with a brain imported from a Third World call center, will be able to wash dishes, vacuum the floor, and even tutor your children in the King's English.
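The economics of the imported brain reduce to a simple break-even calculation. The Python sketch below is a hypothetical illustration: the $10,000 body and $10/day operator cost come from the text, while the $110/day local wage used in the example is an invented placeholder, not data.

```python
# Hypothetical break-even model for a telerobot with an imported operator.
# body_cost and operator_per_day defaults come from the text; any local
# wage passed in is an illustrative placeholder, not real data.


def telerobot_cost(days: int, body_cost: float = 10_000.0,
                   operator_per_day: float = 10.0) -> float:
    """Total cost of the robot body plus `days` days of remote operation."""
    return body_cost + operator_per_day * days


def break_even_days(local_wage_per_day: float, body_cost: float = 10_000.0,
                    operator_per_day: float = 10.0) -> float:
    """Working days after which the telerobot is cheaper than a local worker.

    Returns infinity if the local wage is no higher than the operator's pay,
    since the body cost is then never recovered.
    """
    saving_per_day = local_wage_per_day - operator_per_day
    if saving_per_day <= 0:
        return float("inf")
    return body_cost / saving_per_day


if __name__ == "__main__":
    print(telerobot_cost(365))      # 13650.0
    print(break_even_days(110.0))   # 100.0
```

With that placeholder wage the body pays for itself after 100 working days; the higher the local wage, the sooner the imported brain wins.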

Broadband plus Telerobotics is thus the ultimate in globalization and wage equalization. But should local business ethics, employment standards, and labor laws apply to overseas workers telecommuting into robot bodies located in the US? And after equalization, what high paying work will be left for humans? Inventing?

VII. Should Robots be awarded patents?

Lest you think inventing is a human activity unattainable by machines, unfortunately, we already see machine learning techniques capable of invention. And worse, our laws don't make it clear who owns something invented by a computer program.

When software patents were being considered, I pointed out in a letter to the PTO that software patents could render AI illegal. No law on intellectual property can restrict a human from holding and using knowledge inside their head. If a human acts like someone's patented machine or process, so it goes. But if a robot takes on a patented process, it violates the government-sanctioned monopoly. If I sell an intelligent machine which learns to do the same thing as a machine you've patented, then you may sue me for selling a machine which learns to violate your patent!

With respect to computer discovery and invention, in the 25 years since Doug Lenat's AM, which claimed to be discovering mathematics but was really organizing mathematical regularities built into the LISP language, we have seen increasing invention and basic creative arts accomplished by machine. We have seen simple circuits rediscovered with massive computing by Koza, novel antenna designs, and mechanisms such as the ratchet, triangle, and cantilever discovered in our own evolutionary robotics work. However, this software is not yet aware of, or capable of, reusing its own inventions. Computer optimization may discover more compact forms of existing mechanisms, like sorting networks and circuit layouts, which technically are worth a patent, but such optimization is not the high form of invention that threatens humanity's self-esteem.

But given a patentable discovery, should the robot get the patent? Or should the funding agency which sponsored the research, the builder of the robot software (e.g. me or my student), the owner of the computer it runs on (the university), the person who supplies the problem to be solved, etc., get the patent for what my robot creates? If the robot invents something while working in your factory, should you get the patent? Should robots have to consult the patent and trademark database before making a move to avoid rediscovering known IP? If so, it will be a Shaky robot for sure.

My solution to this AI-creates-IP problem is to resist the Mammonistic temptation to build a patenting machine and claim its results as my own. However, I would like to be able to sell robots who can adapt and invent processes necessary for their own continued employment. How do we balance the rights of human inventors and AI?

Patents hinge on the notion of "non-obviousness" which has a technical meaning that loosely translates as "you can't patent a device or process that workers in a field would create in their ordinary employment." For example, a sharpening or measuring jig which all woodworkers invent in practice cannot be excluded by a later patent.

I believe that as our machine learning and discovery systems improve, and climb the scale of invention from simple geometric forms to novel circuits and systems, their outputs should gradually invalidate existing patents from the simpler range of human invention, pushing non-obviousness to a higher level of creativity: "cannot be discovered by a computer search process." I submit that this notion, which I call "obviousness in retrospect" and define as the case where a machine rediscovers a human invention, should be adopted by the PTO in the future.

Conclusion: The future of automation and human wealth

The fear of robots getting out of control is often expressed in fiction and in movies like the Terminator films. And there is a robot reproducing out of control right now! According to my definition, the CD recorder is a robot, as it has an effect in the real world, it is controlled by algorithmic processes, and it is operating 24x7 to put some people out of work. In this case, the people were employed as intermediaries between musicians and their audiences. These CD-Rs (and soon DVD-Rs) are really home recording robots, and when supplied with information by peer-to-peer networks sharing popular cultural information, they are a significant threat to the powerful Hollywood publishing industry, which still thinks it is manufacturing vinyl and tape rather than licenses to IP.

Even so, a CD burner is really not much of a threat to society considering what the future holds! Home fabrication devices will eventually approach the fidelity of Star Trek's "Replicator". Instead of an invasion of humanoid robot slaves, we will see a gradual evolution from the inkjet printer (a writing robot) and the CD burner (a recording robot) to 3D printers which fabricate in a single plastic material, and then to more complex and integrated forms of general purpose automated manufacturing. Soon there will be a "Fab" in your neighborhood able to automatically manufacture one-of-a-kind products comprising both mechanical and electronic components using plastics, metals, and circuits. Local Fabs and then household replicators are likely to recapitulate human craft history, with automanufacture of wood, ceramics, glass, and metal objects coming first, beyond the single-material fabs available today from several companies. Over time, the complexity of what can be automatically manufactured with "mass customization" will increase, while the scale of what can be automatically produced will shrink from the macro through the meso and micro scales, until a general purpose autofabrication system becomes a natural outgrowth of existing industrial processes.

But there is a downside to this potential end of scarcity. Consider a future where not only the bits comprising books and records are digitized, but also the bits which specify the blueprints for all designed objects. Houses, cars, furniture, appliances, etc. will all be digitized as well. With modern CAD tools, we are well on the way. What is lacking is the general purpose automation and standards necessary for the broad adoption of custom manufactured goods.

It's 2045. Ford Motor Design Company no longer owns manufacturing plants. Because you previously owned a Ford car, you get a DVD in the mail containing the blueprint for the 2045 T-bird. Besides the blueprint, the DVD is enhanced with some cool multimedia advertisements and other material related to the T-bird's history and current bios of the star design team. Well, you get this DVD in the mail, but you don't OWN the blueprint; Ford does. And you don't have a car yet! But if you decide to purchase the car, what you are actually paying for is just the "right to have molecules arranged in the pattern" specified by the blueprint. So, you take it to a local Fab, insert the disk, and 24 hours later, without any US factory workers, your new car rolls out the other end.

The factory is so efficient that you pay only $10,000 for the raw materials and energy dissipated. Ford gets the other $30,000 for the licensed design. It's very lucrative because, I forgot to tell you, your "license" to keep matter in the form of the T-bird expires in 36 months, at which point the car falls apart and you are left with a ton of raw material.

By this time, Ford has laid everyone off except for the 10 engineers designing the blueprint. The company sells a million licenses a year, making 30 billion dollars on a "pure IP" play while only paying a few million in salary. It has won the race to the top of the list of companies rated by "RPE" or "revenue per employee." (Remember the good old days when companies were ranked by how many employees with families they supported?)
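
The figures in this hypothetical can be checked with a few lines of arithmetic. The sketch below is an illustration added here, not part of the original argument. It uses only the scenario's own numbers: $10,000 for materials and energy, $30,000 per license, a million licenses a year, and 10 employees. It assumes those 10 engineers are Ford's entire head count. The constant and function names are hypothetical.

```python
# Figures taken from the hypothetical 2045 T-bird scenario.
MATERIALS_AND_ENERGY = 10_000   # dollars paid to the Fab per car
LICENSE_FEE = 30_000            # dollars paid to Ford per 36-month license
LICENSES_PER_YEAR = 1_000_000   # licenses sold per year
EMPLOYEES = 10                  # assumption: the designers are the whole staff


def sticker_price() -> int:
    """Total the buyer pays: materials and energy plus the design license."""
    return MATERIALS_AND_ENERGY + LICENSE_FEE


def annual_license_revenue() -> int:
    """Ford's yearly revenue when it sells nothing but design licenses."""
    return LICENSE_FEE * LICENSES_PER_YEAR


def revenue_per_employee() -> float:
    """The "RPE" ranking metric: annual revenue divided by head count."""
    return annual_license_revenue() / EMPLOYEES


if __name__ == "__main__":
    print(f"Price per car:          ${sticker_price():,}")
    print(f"Annual license revenue: ${annual_license_revenue():,}")
    print(f"Revenue per employee:   ${revenue_per_employee():,.0f}")
```

Run as a script, it reports a price of $40,000 per car and $30,000,000,000 in annual license revenue. Revenue per employee comes to $3,000,000,000, which is why such a firm would top any RPE ranking.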

When a company produces only IP licenses, and when jobs are taken by telerobotically imported tele-slaves, we will come to the core ethical questions of the age of automation.

We have to examine whether the system of "work-for-hire," invented in Hollywood before the age of computers, is actually fair going forward. Employers like to own all the IP created by employees, but employees have become more expendable. Under existing American employment law, the engineers and designers who put together the blueprint for that 2045 Thunderbird, like the programmers who built valuable early software, can be fired at will, forcibly bequeathing their IP to their employer. Thus employees may no longer settle for a salary, but will quit and form startups to share in the equity of what they create.

Since the car is only a design, instead of buying a Ford you could buy an advanced CAD tool and component licenses, design your own car at home, and pay only for the materials. But of course Fords are much cooler, or the company would have gone out of business like an early video game company.

Perhaps the dot-com gilded age was an illusion on the surface of a bubble. Certainly investment bankers and financial journalists were using mass psychological mind control techniques to get people to foolishly buy unproven stock issues. There was acid in the Kool-Aid they were serving their investors, and then they started drinking it themselves. The idea that a company could be acquired for its millions of non-paying customers, who had themselves been acquired by giving away investors' money, was quite insane. Nevertheless, there is a fundamental truth to the generation of new wealth via the new network. When a book or a song becomes a best-seller (which people actually pay for), it can make the publisher wealthy, and, with a proper royalty agreement, the author as well. Similarly, a software or information product can be reproduced exponentially, making wealth for its publisher. So the promise of websites which can earn revenue through popular adoption, like books or packaged software, remains valid.

One way to view the dot-com boom is as a glimpse of a future in which authors formerly known as employees (even graphic designers, technical writers, and office clerks) own fractional equity in the valuable cultural products created by the organizations they participated in. I think the dot-com age was actually a glimpse of a positive future, a new phase of work history enabled by the lowered cost of the means of production. Human work relationships have evolved from slavery to serfdom to pieceworker to hourly laborer to salaryman. The next phase is author/equity-holder.

Unfortunately, the radical free-software movement believes that once a blueprint (for software, music, or even a car) is digitalized, it should be shared freely with everyone in the world simply because it can be. We know that any book or song can be scanned, released on the Internet P2P network, and delivered to the omnipresent home robots of computer printers and CD burners. Even a 700-page book can have each page legally scanned, under the fair-use doctrine, by a different person, who puts up the page on their website along with a critical review. Now a third party builds a search engine which finds each scanned page and organizes an index to them all.

Should all information be shared freely because it can be? Should every best-selling book be "freed"? The Star Trek replicator leads to an economic dilemma:

If nobody gets royalties, because they gave their IP to their companies under work-for-hire rules before changing jobs, or because they were convinced to donate it to the commons, or because it was simply "freed" by electronic Robin Hoods, then who will organize the R&D behind the complex things we want replicated? As automation becomes more and more prevalent, we will be faced with the question Gene Roddenberry never had to answer: Who gets the royalties when a replicator replicates? Finally, in the age of automation, who will even have an income to buy the stuff our robotic replicators can manufacture?

As a positivist, I think that we can and must devise adaptive markets for recognizing and trading equity in the cultural objects selected for replication. I wrote about this in 1997 as a solution to the Microsoft monopoly and software piracy problems, well before music downloading became an industry and a youth ethics crisis. What is needed is a securities market that provides transparency in pricing and volume. Such a market can regulate fractional IP rights and enable a broad middle class of property owners. Joint and publicly shared ownership in IP is necessary to reward the highest levels of human creativity, especially as basic levels of work are made redundant by global wage equalization and the gradual evolution of intelligent technologies.